OpenCog discussion of the impact of thread-safety and distributed processing on performance (and code structure):

On Sunday, July 6, 2014 5:51:36 PM UTC-4:30, linas wrote:

On 6 July 2014 11:51, Ramin Barati <rek...@gmail.com> wrote:

Anyway, a few years ago, the AtomSpace used the proxy pattern and it was a performance disaster. I removed it, which was a lot of hard, painful work, because it should never have been added in the first place. Atomspace addnode/link operations got 3x faster, getting and setting truth values got 30x faster, getting outgoing sets got 30x faster. You can read about it in opencog/benchmark/diary.txt

I can't even imagine what use the AtomSpace has for the proxy pattern.

Ostensibly thread-safety and distributed processing. Thread-safety, because everything was an "atom space request" that went through a single serialized choke point. Distributed processing, because you could then insert ZeroMQ into that choke point and run it over the network. The zmq stuff was even prototyped. When measured, it did a few hundred atoms per second, so work stopped.

To me, it was an example of someone getting an idea, but failing to think it through before starting to code. And once the code was written, it became almost impossible to admit that it was a failure. Because that involves egos and emotions and feelings.

--linas

I think designing most parts of the software around data grid and compute grid constructs would allow:

- intuitive coding, i.e. no special constructs or APIs, just closures and local data. Closures stay cheap even over many iterations with no thread switching. For example:

Range(0..100000).foreach { calculateHeavily(it); }.collect( sum )

A nice side effect is that a new member or hire can join a project without having to learn the intricacies of messaging and multithreading plumbing. There is already enough logic to learn without adding that glue.
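The closure style in the point above can be approximated in plain Java 8 with streams. This is a minimal sketch, not GridGain code: `calculateHeavily` is a hypothetical stand-in that just squares its input, and because `foreach` returns nothing, `map(...).sum()` plays the role of `foreach { ... }.collect(sum)`. The closure never changes. Only the `parallel` flag decides between single-threaded and multithreaded execution, which is the "multithreading as configuration" idea in miniature.

```java
import java.util.stream.LongStream;

class ClosureSum {
    // Hypothetical stand-in for calculateHeavily(); here it just squares its input.
    static long calculateHeavily(long i) {
        return i * i;
    }

    // The loop body is an ordinary closure. Whether it runs on one thread or
    // on the JDK's common fork/join pool is decided by a flag, not by the body.
    static long run(long n, boolean parallel) {
        LongStream range = LongStream.range(0, n);
        if (parallel) {
            range = range.parallel();
        }
        // map + sum plays the role of foreach { ... }.collect(sum)
        return range.map(ClosureSum::calculateHeavily).sum();
    }

    public static void main(String[] args) {
        System.out.println(run(100_000, false)); // 333328333350000
        System.out.println(run(100_000, true));  // 333328333350000
    }
}
```

Both runs produce the same sum. For a workload this cheap the parallel version may well be slower, which is the point made below about nanosecond-scale closures.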

- practical multithreading. I'm tempted to say painless multithreading :) Multithreading becomes configuration, not baked-in logic that everyone is "afraid" to change. Converting single-threaded code to multithreaded code takes time, but I think it takes even more time to make it work right: no race conditions, no negative scaling. Then, when all else fails, you revert to the original code, while other development has been going on concurrently.

- messaging. Even the simple code above implies messaging, i.e. logically it is sending "int i" into the closure. At runtime this can be single-threaded, multithreaded, or multi-node. If calculateHeavily takes nanoseconds, multithreading is pointless, but if it takes more than a second, going multi-node is probably worthwhile.

- data affinity. The data passed to the closure doesn't have to be "data"/"content"; it can be a key, which the closure then loads locally, processes, and aggregates.

findAtomsWhichAre(Person).foreach( (personId) -> { person = get(personId); calculateHeavily(person); } ).collect( stats )

I haven't seen the ZeroMQ implementation of the AtomSpace, but I suspect it is (was?) a chatty protocol, and it would have turned out differently had data affinity been considered. I have only ever used AMQP, but I think both ZeroMQ and AMQP are great for implementing messaging protocols rather than for scaling, unless the system is explicitly designed for it, as Apache Storm uses ZeroMQ.
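To make the data-affinity and chattiness argument concrete, here is a toy simulation in Java. Everything in it is hypothetical: the "nodes" are in-process maps, `owner` is an assumed modulo partitioning rule, and a "message" is just a counter. The chatty version pulls each value to the caller (one request and one response per key). The affinity version sends each node only the keys it owns and gets back a single partial sum.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class DataAffinity {
    // One simulated grid node that holds its own partition of the data.
    static class Node {
        final Map<Integer, Long> local = new HashMap<>();

        // Runs next to the data: loads each key locally, returns only the total.
        long sumOf(List<Integer> keys) {
            long total = 0;
            for (int key : keys) {
                total += local.get(key);
            }
            return total;
        }
    }

    // Result of a run: the aggregate plus the number of simulated network messages.
    static class Outcome {
        final long sum;
        final int messages;
        Outcome(long sum, int messages) { this.sum = sum; this.messages = messages; }
    }

    // Hypothetical partitioning rule: key k lives on node (k mod nodeCount).
    static int owner(int key, int nodeCount) {
        return Math.floorMod(key, nodeCount);
    }

    // Builds a cluster where key k stores the value k on its owning node.
    static List<Node> cluster(int nodeCount, int keyCount) {
        List<Node> nodes = new ArrayList<>();
        for (int i = 0; i < nodeCount; i++) nodes.add(new Node());
        for (int key = 0; key < keyCount; key++) {
            nodes.get(owner(key, nodeCount)).local.put(key, (long) key);
        }
        return nodes;
    }

    // Chatty: pull every value to the caller, one request/response pair per key.
    static Outcome chatty(List<Node> nodes, List<Integer> keys) {
        long total = 0;
        int messages = 0;
        for (int key : keys) {
            messages += 2;
            total += nodes.get(owner(key, nodes.size())).local.get(key);
        }
        return new Outcome(total, messages);
    }

    // Affinity: ship each node the keys it owns, get back one partial sum.
    static Outcome affinity(List<Node> nodes, List<Integer> keys) {
        List<List<Integer>> perNode = new ArrayList<>();
        for (int i = 0; i < nodes.size(); i++) perNode.add(new ArrayList<>());
        for (int key : keys) perNode.get(owner(key, nodes.size())).add(key);

        long total = 0;
        int messages = 0;
        for (int i = 0; i < nodes.size(); i++) {
            if (perNode.get(i).isEmpty()) continue;
            messages += 2; // one batch of keys out, one partial sum back
            total += nodes.get(i).sumOf(perNode.get(i));
        }
        return new Outcome(total, messages);
    }

    public static void main(String[] args) {
        List<Node> nodes = cluster(2, 100);
        List<Integer> keys = new ArrayList<>();
        for (int k = 0; k < 100; k++) keys.add(k);
        Outcome a = chatty(nodes, keys);
        Outcome b = affinity(nodes, keys);
        System.out.println(a.sum + " in " + a.messages + " messages"); // 4950 in 200 messages
        System.out.println(b.sum + " in " + b.messages + " messages"); // 4950 in 4 messages
    }
}
```

With 100 keys on 2 nodes both versions return the same sum, but the affinity version exchanges 4 messages instead of 200. The same grouping of work per node is what the bulk messaging point below describes.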

- caching... consistently & deterministically. One way to reduce chattiness is to cache data you already have so it can be reused on later invocations (hopefully many; otherwise cache misses will make things worse). The problem is that a cache becomes stale. The solution is to use the data grid model, in which the cache is consistent at all times. While this means a bit more coupling between cache and database, the programming model stays the same (as in point #1) and there is no need for stale-cache checks.

- caching... transactionally. Implementing atomicity manually is hard when using messaging/RPC/remote proxies, or even basic multithreading. A data grid framework allows transactional caching, so

ids.foreach { id -> a = get(id); a = process(a); put(id, a) }

would not step on another concurrent operation.
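The lost-update problem that transactional caching avoids can be demonstrated on a single JVM. This sketch swaps in `ConcurrentHashMap.compute` as a stand-in for a grid transaction: it makes the get/process/put sequence for one key atomic, whereas a data grid's transactions would, as I understand it, extend that guarantee across keys and nodes. The `"counter"` key, thread count, and iteration count are arbitrary choices for illustration.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class AtomicUpdate {
    // Unsafe: get, process, put as separate steps. Two threads can read the
    // same old value, and one of their increments is then lost.
    static void unsafeIncrement(ConcurrentHashMap<String, Integer> cache, String id) {
        Integer a = cache.get(id);
        cache.put(id, a + 1);
    }

    // Atomic: compute() performs get/process/put for one key as a single step.
    static void atomicIncrement(ConcurrentHashMap<String, Integer> cache, String id) {
        cache.compute(id, (key, a) -> a + 1);
    }

    // Runs 'threads' workers, each incrementing the same key 'perThread' times.
    static int run(boolean atomic, int threads, int perThread) {
        ConcurrentHashMap<String, Integer> cache = new ConcurrentHashMap<>();
        cache.put("counter", 0);
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.submit(() -> {
                for (int i = 0; i < perThread; i++) {
                    if (atomic) {
                        atomicIncrement(cache, "counter");
                    } else {
                        unsafeIncrement(cache, "counter");
                    }
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return cache.get("counter");
    }

    public static void main(String[] args) {
        System.out.println("unsafe: " + run(false, 4, 100_000)); // may be less than 400000
        System.out.println("atomic: " + run(true, 4, 100_000));  // always 400000
    }
}
```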

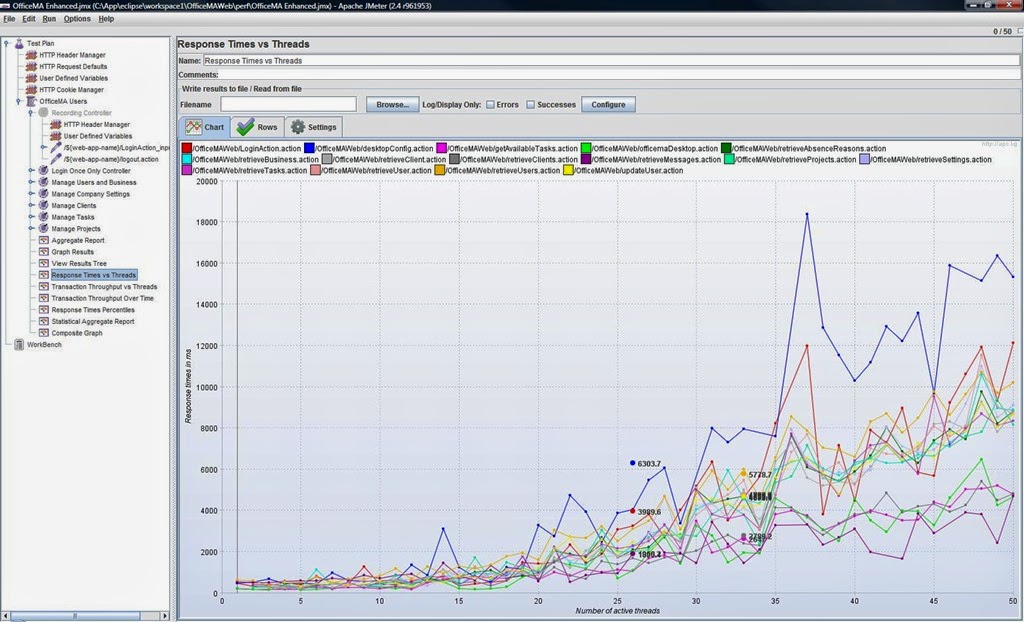

- performance testing for several configurations. If a project has performance unit tests, they can run in multiple configurations: 1 thread; n threads; 2 nodes × n threads.

Ideally this gives instant gratification: if a performance unit test shows negative scaling, you notice it early, and if it approaches linear scaling, congrats & have a beer :D
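A minimal sketch of running one workload in several thread configurations and checking both correctness and scaling. The workload (`work`, a loop of linear congruential generator steps) and the thread counts are assumptions for illustration, and only the thread dimension is covered, not multiple nodes. A real performance suite would likely use a harness such as JMH to handle JIT warm-up and measurement noise.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ScalingCheck {
    // Hypothetical CPU-bound workload standing in for calculateHeavily().
    static long work(long i) {
        long x = i;
        for (int k = 0; k < 1_000; k++) {
            x = x * 6364136223846793005L + 1442695040888963407L; // LCG step
        }
        return x & 0xFF;
    }

    // Runs the same workload with a given thread count by splitting [0, n) into chunks.
    static long runWith(int threads, long n) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<Long>> parts = new ArrayList<>();
            long chunk = (n + threads - 1) / threads;
            for (int t = 0; t < threads; t++) {
                long from = t * chunk;
                long to = Math.min(n, from + chunk);
                parts.add(pool.submit(() -> {
                    long sum = 0;
                    for (long i = from; i < to; i++) sum += work(i);
                    return sum;
                }));
            }
            long total = 0;
            for (Future<Long> f : parts) total += f.get();
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        long n = 200_000;
        double baselineMs = 0;
        for (int threads : new int[] {1, 2, 4}) {
            long start = System.nanoTime();
            long result = runWith(threads, n);
            double ms = (System.nanoTime() - start) / 1e6;
            if (threads == 1) baselineMs = ms;
            // The result must match in every configuration; speedup below 1.0 means negative scaling.
            System.out.printf("%d thread(s): result=%d, %.1f ms, speedup %.2fx%n",
                    threads, result, ms, baselineMs / ms);
        }
    }
}
```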

- bulk read & write. Related to #5: if there are lots of scattered writes to the database, a cache can batch them using write-behind (deferred write-through) while maintaining transactional behavior. Instead of 100 writes of 1 document each, the cache can issue 1 bulk database request of 100 documents. You may let the framework do it, or code the bulk write manually in certain cases; the choice is yours.

- bulk messaging. Related to #3 and #4: a straightforward messaging protocol may split 100 operations into 50 messages to node1 and 50 messages to node2, which can be significant overhead. A compute grid can divide the 100 operations into 2 messages: 1 message of 50 operations to node1 and 1 message of 50 operations to node2.

- avoid premature optimization, while allowing both parallelization and optimization. Related to point #7: since you know you can change the configuration at any time, if you notice negative scaling you can simply set that particular cache or compute task to single-thread or single-node. Implementing #2 + #3 manually sometimes actually hinders optimization.
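The bulk write idea can be sketched as a small write-behind buffer: puts are queued and flushed to the database in batches, and the batch size is a configuration knob (a batch size of 1 degenerates to one round trip per write). `Database`, `bulkWrite`, and `BatchingWriter` are hypothetical names, and this single-threaded sketch ignores failures and transactions, which a real data grid would have to handle.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class WriteBehind {
    // Hypothetical database that accepts one bulk write per round trip.
    static class Database {
        final Map<String, String> rows = new LinkedHashMap<>();
        int roundTrips = 0;

        void bulkWrite(Map<String, String> batch) {
            roundTrips++;
            rows.putAll(batch);
        }
    }

    // Buffers puts and flushes them to the database in batches of batchSize.
    // batchSize = 1 means one round trip per write, so batching is just configuration.
    static class BatchingWriter {
        private final Database db;
        private final int batchSize;
        private final Map<String, String> pending = new LinkedHashMap<>();

        BatchingWriter(Database db, int batchSize) {
            this.db = db;
            this.batchSize = batchSize;
        }

        void put(String key, String value) {
            pending.put(key, value); // a later put to the same key replaces the buffered one
            if (pending.size() >= batchSize) flush();
        }

        void flush() {
            if (pending.isEmpty()) return;
            db.bulkWrite(new LinkedHashMap<>(pending));
            pending.clear();
        }
    }

    // Writes 'documents' distinct documents and reports the database round trips used.
    static int roundTripsFor(int documents, int batchSize) {
        Database db = new Database();
        BatchingWriter writer = new BatchingWriter(db, batchSize);
        for (int i = 0; i < documents; i++) {
            writer.put("doc-" + i, "content " + i);
        }
        writer.flush(); // write out whatever is still buffered
        return db.roundTrips;
    }

    public static void main(String[] args) {
        System.out.println(roundTripsFor(100, 1));   // 100
        System.out.println(roundTripsFor(100, 100)); // 1
        System.out.println(roundTripsFor(100, 30));  // 4
    }
}
```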

While my reasons above pertain to performance, I'm not suggesting that you think about performance while coding. I do suggest choosing a programming model that allows you to implement performance tweaks unobtrusively, and even experimentally, while retaining your sanity (that last part seems to be important at times :)

For example, with GridGain you use closures, Java 8 lambdas, or annotated methods to perform compute, so things aren't that much different. To access data you use a get/put/remove/query abstraction, which you usually have anyway, but now you have the option of making these read-through and write-through caches instead of going directly to the database. Data may be served from a remote or local database, a remote grid node, or local memory; this is abstracted away. The choice is there, but it doesn't have to clutter code: it can be implemented separately in a different class (Strategy pattern) or configured declaratively.
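Here is a minimal sketch of the Strategy-pattern option: application code depends only on a `Store` interface, and whether reads hit the database directly or go through a read-through/write-through cache is decided by which implementation is plugged in. The interface and class names are hypothetical, and this is plain Java, not GridGain's API. This local cache stays consistent only with writes made through it; keeping it consistent with writes from other nodes is what a data grid adds.

```java
import java.util.HashMap;
import java.util.Map;

class ReadThrough {
    // The only data-access abstraction application code sees.
    interface Store {
        String get(String key);
        void put(String key, String value);
    }

    // Direct strategy: every call goes to the (simulated) database.
    static class DatabaseStore implements Store {
        final Map<String, String> table = new HashMap<>();
        int reads = 0;

        public String get(String key) { reads++; return table.get(key); }
        public void put(String key, String value) { table.put(key, value); }
    }

    // Caching strategy: read-through on misses, write-through on puts,
    // so the cached copy matches every write made through this Store.
    static class ReadThroughCache implements Store {
        private final Store backing;
        private final Map<String, String> cache = new HashMap<>();

        ReadThroughCache(Store backing) { this.backing = backing; }

        public String get(String key) {
            return cache.computeIfAbsent(key, backing::get); // load only on a miss
        }

        public void put(String key, String value) {
            backing.put(key, value); // write-through: database first
            cache.put(key, value);   // then keep the cached copy in sync
        }
    }

    // Application logic: identical whichever Store strategy is plugged in.
    static String greet(Store store, String userId) {
        return "Hello, " + store.get(userId);
    }

    public static void main(String[] args) {
        DatabaseStore db = new DatabaseStore();
        db.put("u1", "Alice");
        Store cached = new ReadThroughCache(db);
        for (int i = 0; i < 3; i++) greet(cached, "u1");
        System.out.println(greet(cached, "u1") + ", db reads = " + db.reads); // Hello, Alice, db reads = 1
    }
}
```

Swapping `cached` for `db` in the call to `greet` would change nothing in the application logic, only the number of database reads.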

For Java it's easy to use GridGain. I believe modern languages like Python or Scheme also have ways to achieve a similar programming model, though for C++ I probably can't say much.

Personally I'd love for a project to evolve (instead of being rewritten), i.e. to refactor different parts over versions while retaining the general logic and other parts. This not only retains code (and source history) but, more importantly, team knowledge sharing. In a rewrite it's hard not to repeat the same mistakes, not to mention the second-system effect.

In my experience with Bippo eCommerce, our last complete rewrite was in 2011, when we switched from PHP to Java. Since then we have made several major architectural changes, as well as framework changes: JSF to quasi-JavaScript to Wicket, Java EE to OSGi to Spring, Java6 to Java7 to Java8, LDAP to MongoDB, MySQL to MongoDB to MongoDB + PostgreSQL, and so on... Sure, we had our share of mistakes, but the valuable part is that we never rewrote the entire codebase at once; we deprecated and removed parts of the codebase as we went along. And the team retains collective knowledge of the process, e.g. the dependencies between one library and another, and how changing one part of the architecture breaks another. I find that very beneficial.